Parsedom

- August 7, 2025

- Automation

n8n in a Nutshell

n8n is a workflow automation tool built on Node.js. It is source-available under a fair-code license and free to self-host. It lets you create visual workflows to automate tasks across 400+ services like Gmail, Google Sheets, and APIs, and you can add custom logic using JavaScript. n8n can be self-hosted or run in the cloud, making it a flexible way to build powerful automations with little or no code.

What is Tweet Sentiment Analysis?

Tweet Sentiment refers to the underlying emotion or opinion expressed in a tweet. It can be positive, negative, or neutral, helping businesses and individuals understand public feelings and reactions about a topic, brand, or product on X (formerly Twitter).

By analyzing tweet sentiment, you can gain insights into customer satisfaction, brand reputation, and trending opinions, all automatically and in near real time, using tools like n8n combined with AI-powered sentiment models.

Effortless Tweet Sentiment Analysis with n8n & GPT API

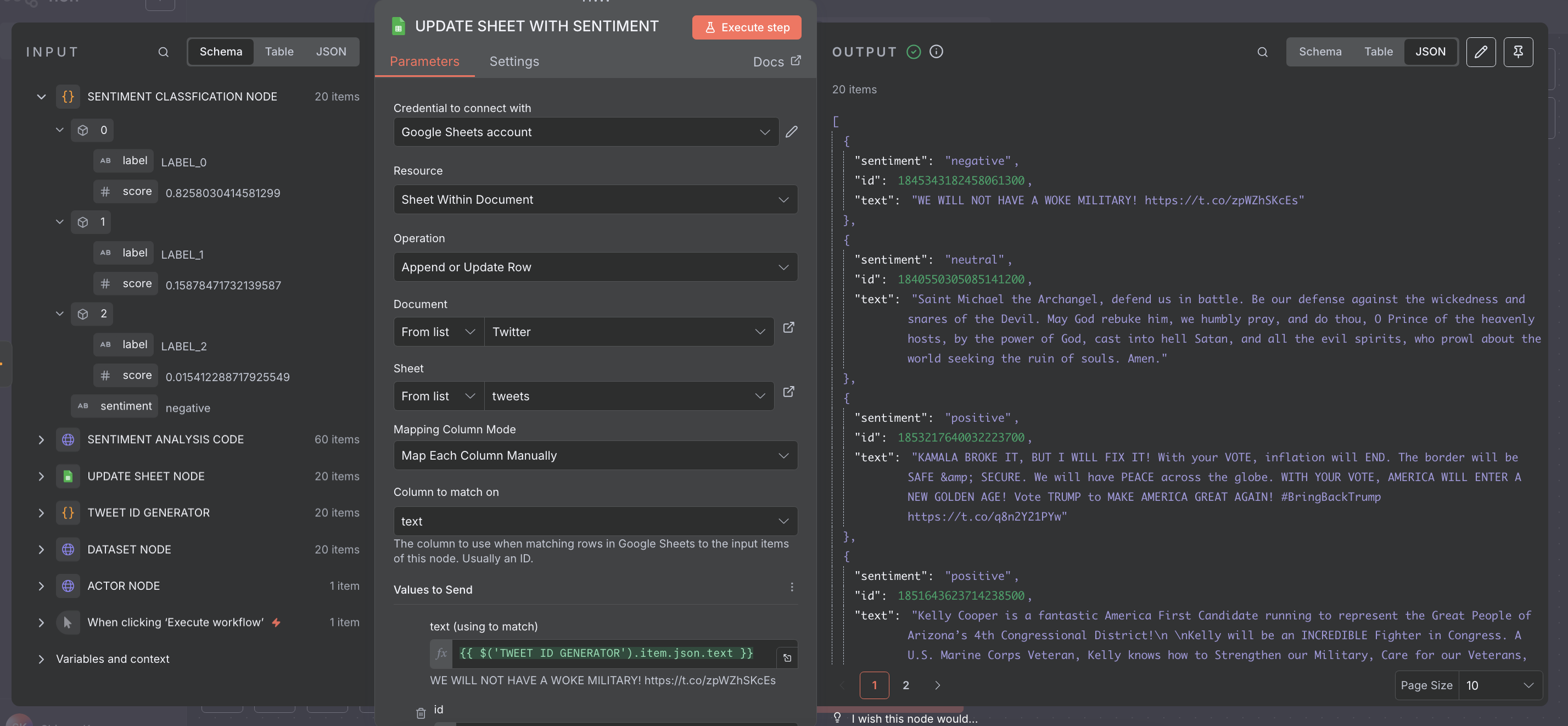

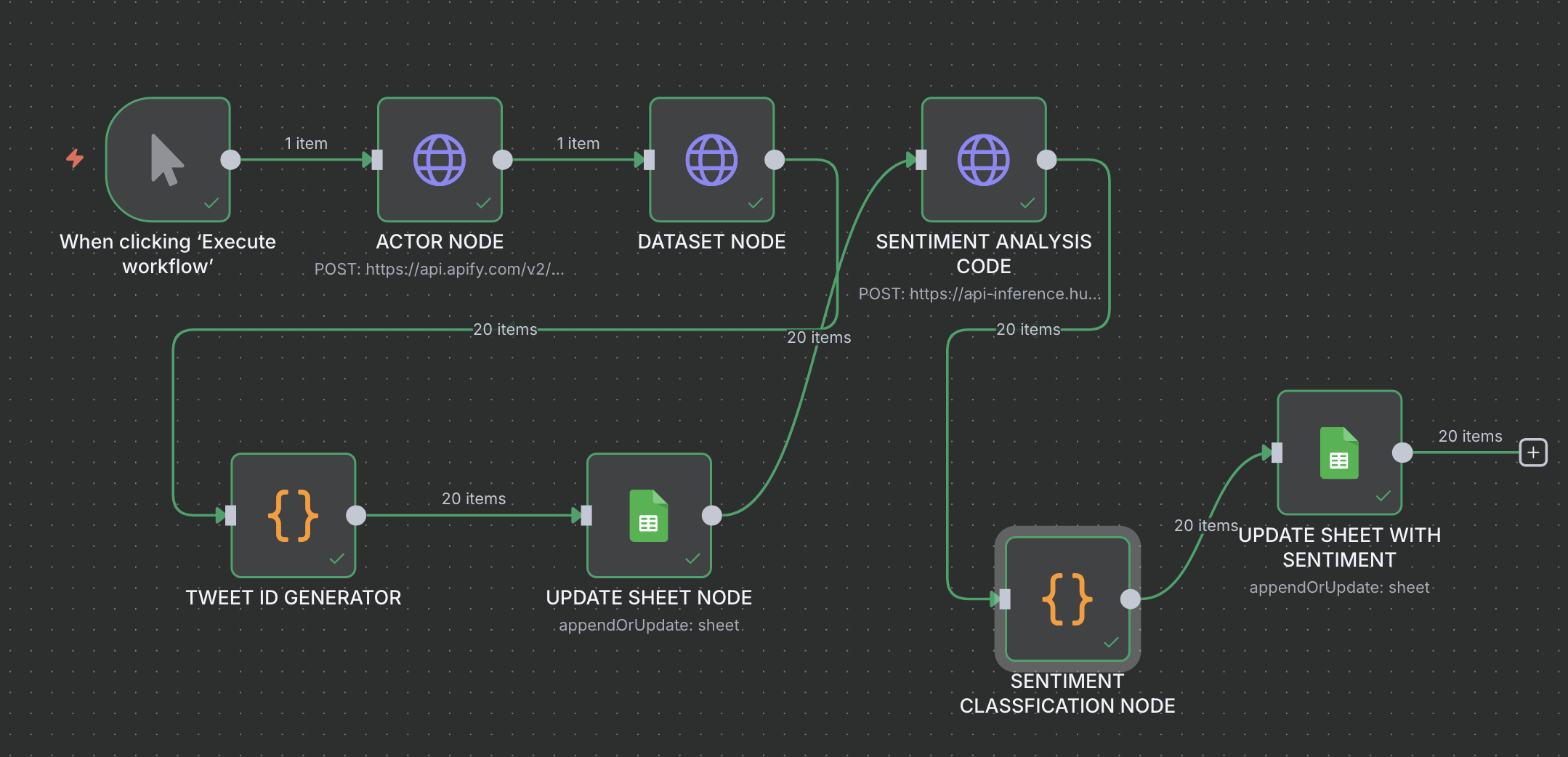

Workflow Steps

Don’t be overwhelmed! Each step is explained in the sections below.

- Run Apify Tweet Scraper Actor via HTTP node

- Get datasetId from the actor run

- Fetch the dataset using datasetId via another HTTP request

- Use Code node to assign random unique IDs to tweets

- Add tweets to Google Sheet

- Use Hugging Face model cardiffnlp/twitter-roberta-base-sentiment to analyze sentiment

- Use Code node to categorize the sentiment as Positive, Negative, or Neutral

- Update Google Sheet with the final sentiment results

Note: Links to the model and the Apify actor used are listed at the bottom of the blog for easy reference.

This workflow automates tweet sentiment analysis to help track product feedback and public perception. It starts by running an Apify actor to scrape tweets based on any keyword, product name, or Twitter handle. The tweets are fetched using the dataset ID, cleaned, and assigned a unique random ID using a Code node. All tweets are then logged into a Google Sheet. The Hugging Face model cardiffnlp/twitter-roberta-base-sentiment analyzes sentiment, and a Code node categorizes it as Positive, Negative, or Neutral. Finally, everything — from the actual tweet to its sentiment — is updated in the Google Sheet for easy review.

A Real-World Problem, Solved With Automation

A client wanted to monitor public opinion about their product on Twitter — how people were reacting, what kind of reviews they were getting, whether the buzz was positive or negative, and how much hype their product was really generating.

Manually checking tweets, copying them into sheets, and interpreting tone was slow, inefficient, and highly error-prone.

We built a fully automated solution in n8n — it scrapes live tweets about the product, classifies the sentiment using Hugging Face, and logs everything to Google Sheets in real time. Whether it’s praise, criticism, or just neutral buzz, the client now gets a clear snapshot of how their product is being received — without lifting a finger.

A process that once demanded constant attention is now smart, automated, and insightful.

Prerequisites

- Set up and connect your Google Sheets API credentials in n8n. This will allow the workflow to read from and write to your target spreadsheet.

- Have a valid Hugging Face API token for accessing the sentiment analysis model. You can use the model cardiffnlp/twitter-roberta-base-sentiment.

- Set your Apify API token in n8n to run a tweet scraping actor. You can use any actor you prefer, or follow along with this one: kaitoeasyapi/twitter-x-data-tweet-scraper-pay-per-result-cheapest.

How We Built This Automation

First, an HTTP Request node starts the Apify actor run:

- Method: POST

- URL: https://api.apify.com/v2/acts/kaitoeasyapi~twitter-x-data-tweet-scraper-pay-per-result-cheapest/runs?token=YOUR_APIFY_TOKEN

- Authentication: Predefined Credential (Apify API)

- Headers: [ { "Content-Type": "application/json" } ]

- Body (JSON): { "from": "realDonaldTrump", "twitterContent": "war OR conflict OR Iran OR Israel OR military OR missile OR attack OR sanctions OR diplomacy OR peace OR nuclear OR troop OR battlefield OR strike OR invasion OR ceasefire OR missilestrike OR defense OR escalation", "maxItems": 500, "queryType": "Top", "lang": "en" }

💡 Tip:

- You can customize twitterContent to match your niche. Example: “AI OR ChatGPT OR LLM OR machine learning” for AI trends, or “Olympics OR gold medal OR Paris 2024” for sports updates.

- You can also change the from field to scrape tweets from any account you want (e.g., kanyewest).

- You can change the queryType to any supported type, such as latest, top, or mixed, depending on your needs.
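For readers who prefer to see the request as code, the HTTP node settings above can be sketched as a plain JavaScript function. This is only an illustration: buildActorRunRequest is a hypothetical helper (not part of n8n or Apify), the keyword list is an example, and the function only assembles the request options rather than sending them.

```javascript
// Hypothetical helper: assembles the same POST request the HTTP Request node sends.
const ACTOR_ID = 'kaitoeasyapi~twitter-x-data-tweet-scraper-pay-per-result-cheapest';

function buildActorRunRequest(token, { from, keywords, maxItems = 500, queryType = 'Top', lang = 'en' }) {
  // encodeURIComponent guards against tokens containing reserved URL characters.
  const url = `https://api.apify.com/v2/acts/${ACTOR_ID}/runs?token=${encodeURIComponent(token)}`;
  return {
    url,
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    // Mirrors the OR-joined twitterContent format used in the body above.
    body: JSON.stringify({ from, twitterContent: keywords.join(' OR '), maxItems, queryType, lang }),
  };
}

const request = buildActorRunRequest('YOUR_APIFY_TOKEN', {
  from: 'kanyewest',
  keywords: ['AI', 'ChatGPT', 'LLM', 'machine learning'],
});
console.log(request.url);
console.log(request.body);
```

Outside n8n, the returned object could be passed to fetch(request.url, request) on a runtime that provides fetch, such as Node.js 18 or later.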

Apify returns a datasetId (the defaultDatasetId field of the response) after the actor runs. We captured this ID using an n8n Set or Function node for use in the next step.

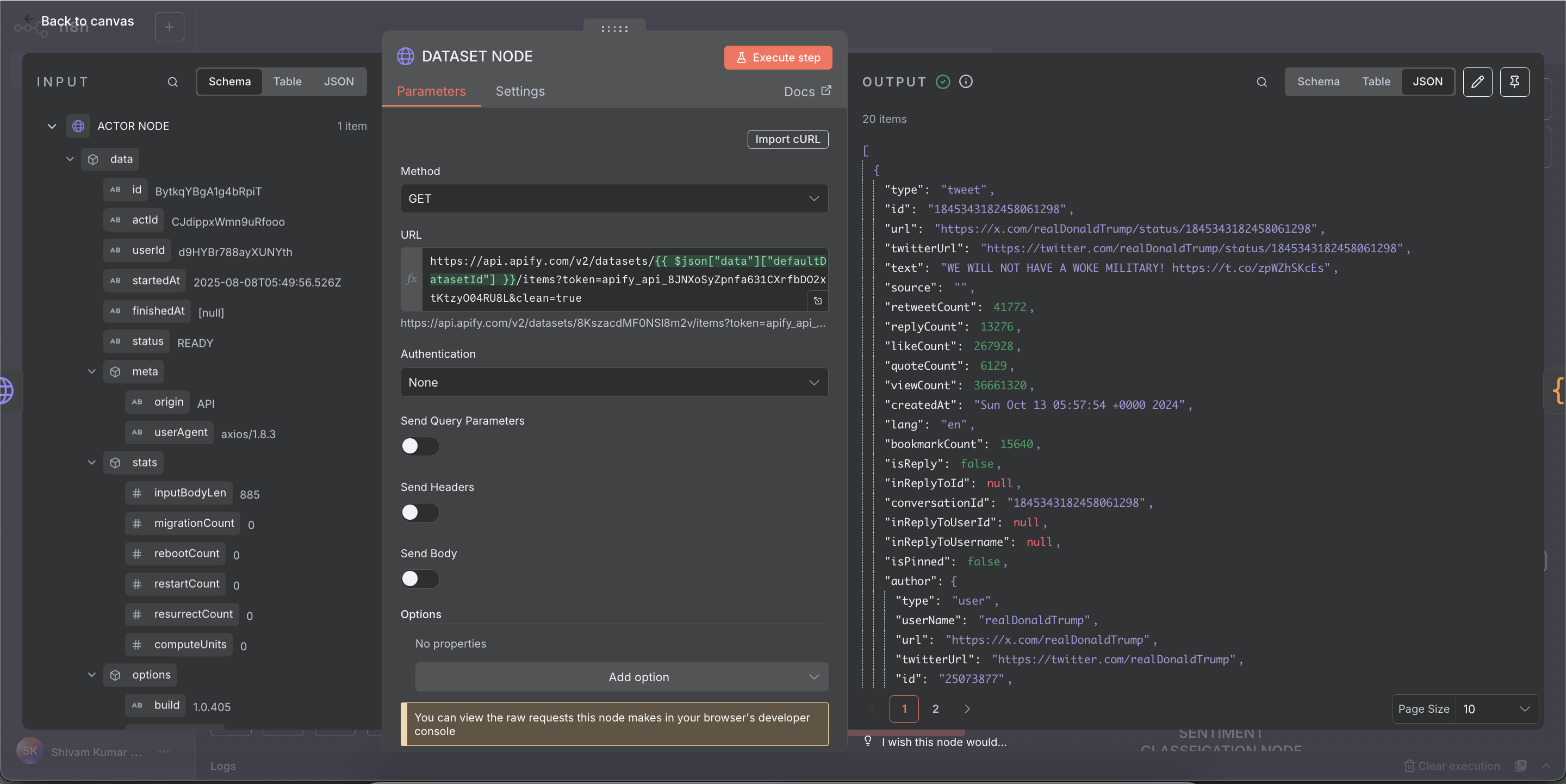

A second HTTP Request node then fetches the dataset:

- Method: GET

- URL: https://api.apify.com/v2/datasets/{{ $json["data"]["defaultDatasetId"] }}/items?token=YOUR_APIFY_TOKEN&clean=true

- Authentication: None (API token included in URL)

- Headers: None

- Body: None
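The URL expression above can be read as a small function that pulls the dataset ID out of the run response and builds the items URL. The sketch below assumes the response shape implied by that expression (data.defaultDatasetId); datasetItemsUrl is a hypothetical helper and the sample ID is made up.

```javascript
// Hypothetical helper: derives the dataset items URL from an actor run response.
function datasetItemsUrl(runResponse, token) {
  const datasetId = runResponse?.data?.defaultDatasetId;
  if (!datasetId) {
    throw new Error('Run response has no data.defaultDatasetId');
  }
  // URLSearchParams handles the encoding of the query string.
  const params = new URLSearchParams({ token, clean: 'true' });
  return `https://api.apify.com/v2/datasets/${datasetId}/items?${params}`;
}

// Sample response, trimmed to the one field this step needs (the ID is made up).
const sampleRun = { data: { defaultDatasetId: 'abc123' } };
console.log(datasetItemsUrl(sampleRun, 'YOUR_APIFY_TOKEN'));
```

Because the run is started asynchronously, the dataset may still be empty if it is fetched immediately. Placing a Wait node between the two requests is one way to handle that.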

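Next, a Code node assigns IDs to the tweets:

    // Replace the placeholder id (-1) with a random ID; real tweet IDs pass through unchanged.
    const items = $input.all();

    return items.map(item => {
      const { id: originalId, ...rest } = item.json;
      // Compare as a string: converting real tweet IDs to Number would lose precision.
      const isPlaceholder = String(originalId) === '-1';
      const newId = isPlaceholder
        ? String(Math.floor(Math.random() * 1000000000))
        : String(originalId);
      // n8n expects each returned item to wrap its data in a json key.
      return { json: { id: newId, ...rest } };
    });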

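Sometimes, if the actor doesn’t find real tweets (due to errors or no matches), it sends a mock tweet with id = -1. This code handles that by replacing -1 with a random ID, so placeholder rows do not all share the same key. The ID is random rather than guaranteed unique, although collisions are unlikely.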
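If guaranteed uniqueness within a batch matters, one option is to track the IDs already in use and retry on a collision. The following is a minimal standalone sketch of that idea, not the code used in the workflow: assignIds and the mock- prefix are hypothetical, and the sample tweets are made up.

```javascript
// Standalone sketch: replace placeholder ids (-1) with random ids that are unique within the batch.
function assignIds(tweets) {
  // Track every real id so a generated id can never collide with one.
  const used = new Set(tweets.map(t => String(t.id)).filter(id => id !== '-1'));
  return tweets.map(tweet => {
    if (String(tweet.id) !== '-1') {
      // Keep real ids as strings so large values are not rounded by Number.
      return { ...tweet, id: String(tweet.id) };
    }
    let candidate;
    do {
      candidate = `mock-${Math.floor(Math.random() * 1e9)}`;
    } while (used.has(candidate)); // retry in the rare case of a collision
    used.add(candidate);
    return { ...tweet, id: candidate };
  });
}

const sample = [
  { id: '1812345678901234567', text: 'Real tweet' },
  { id: -1, text: 'Placeholder A' },
  { id: -1, text: 'Placeholder B' },
];
const withIds = assignIds(sample);
console.log(withIds.map(t => t.id));
```

The prefix makes placeholder rows easy to spot and filter out later in the sheet.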

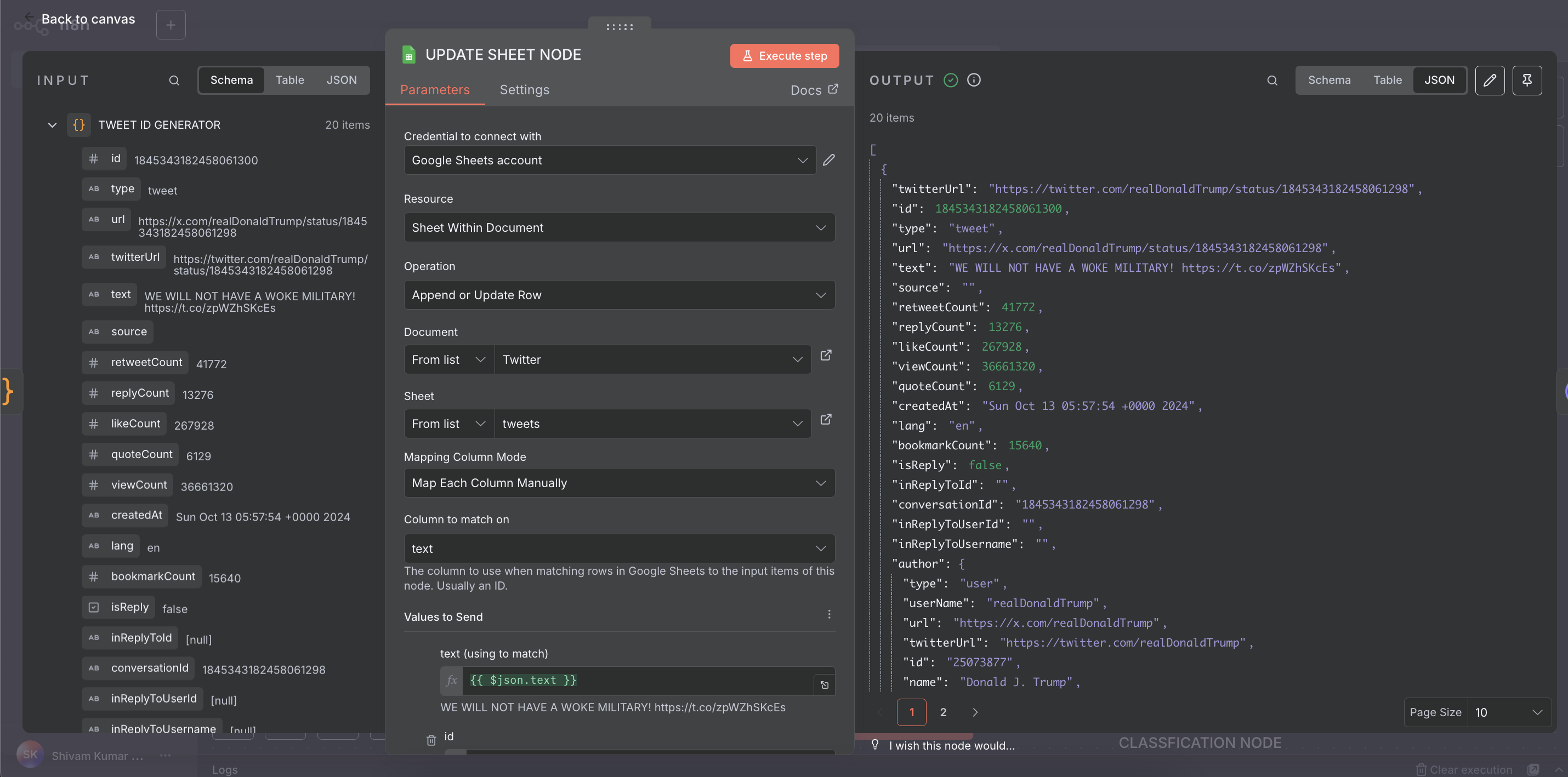

The tweets are then added to Google Sheets with a Google Sheets node:

- Credential: Connected Google Sheet account

- Resource: Sheet Within Document

- Operation: Append or Update Row

- Document: Twitter

- Sheet: tweets

- Mapping: Mapped each tweet field manually to columns
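Append or Update Row matches incoming data against a key column, which is why every tweet needs its own ID. The sketch below imitates that behaviour in memory to make the effect visible. It assumes id is the matching column; upsertRows, the column names, and the sample rows are all hypothetical.

```javascript
// In-memory imitation of an append-or-update keyed on one column (assumed here to be "id").
function upsertRows(sheetRows, incoming, key = 'id') {
  const byKey = new Map(sheetRows.map(row => [String(row[key]), row]));
  for (const row of incoming) {
    const k = String(row[key]);
    // Existing key: merge the new values into the row. New key: append a row.
    byKey.set(k, { ...(byKey.get(k) || {}), ...row });
  }
  return [...byKey.values()];
}

const sheet = [{ id: '1', text: 'Great phone', sentiment: '' }];
const updated = upsertRows(sheet, [
  { id: '1', sentiment: 'positive' },                 // updates the existing row
  { id: '2', text: 'Battery is bad', sentiment: '' }, // appended as a new row
]);
console.log(updated);
```

With this matching rule, two tweets that shared the same ID would overwrite each other instead of producing two rows.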

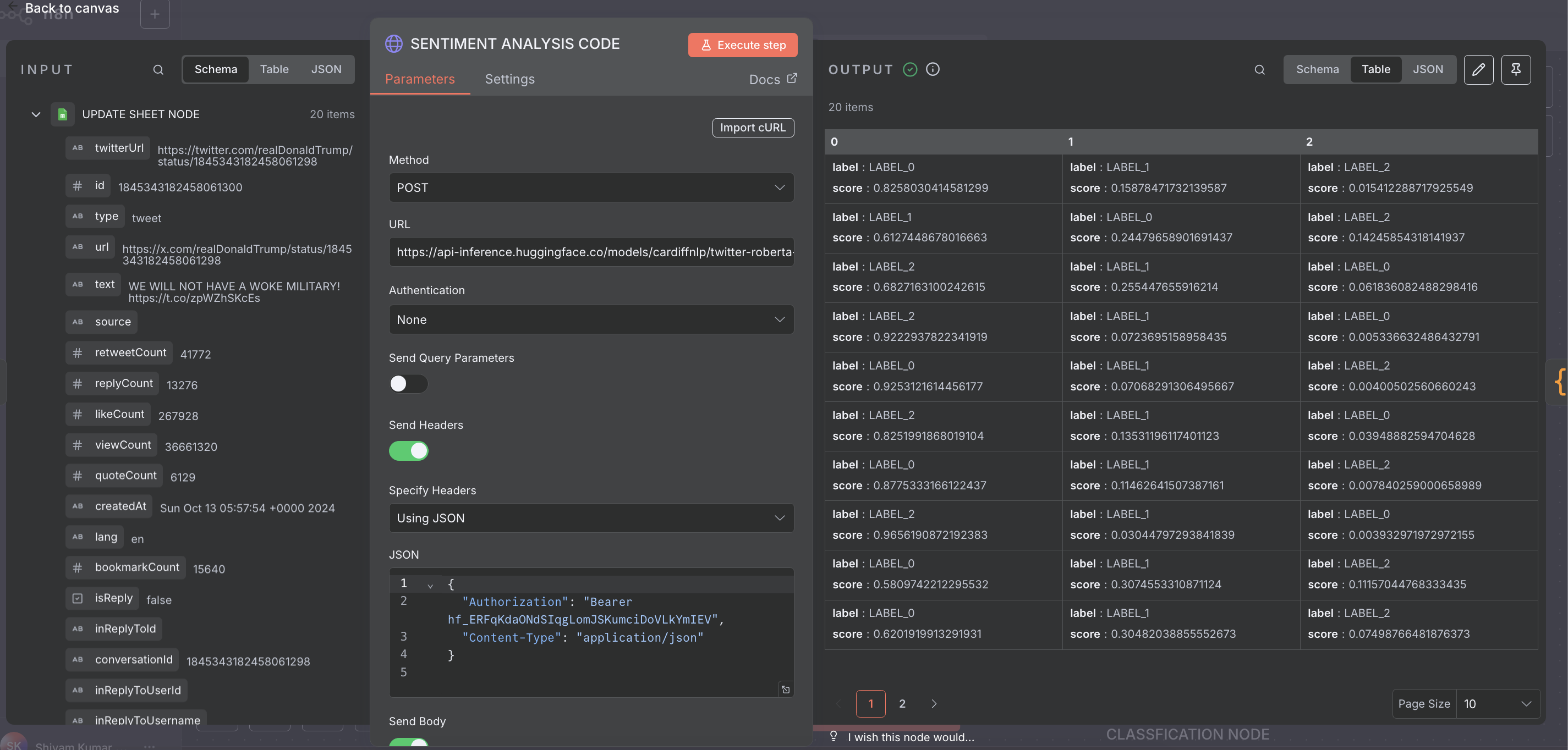

We then sent each tweet to the cardiffnlp/twitter-roberta-base-sentiment model via an HTTP Request node.

HTTP Request Configuration:

- Method: POST

- URL: https://api-inference.huggingface.co/models/cardiffnlp/twitter-roberta-base-sentiment

- Headers: [ { "Authorization": "Bearer YOUR_HF_API_KEY" }, { "Content-Type": "application/json" } ]

- Body (JSON): { "inputs": "{{ $json.text }}" }

💡 Tip:

You can try changing the model to another one, like distilbert-base-uncased-finetuned-sst-2-english, for faster results. Note that this model returns only POSITIVE and NEGATIVE labels (it has no neutral class), so the label mapping in the next step would need adjusting. Feel free to explore other Hugging Face models to find the best fit for your needs!
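One detail worth watching in the request body: tweets often contain quotes and line breaks, which can produce invalid JSON when the text is pasted straight into a string template. A minimal sketch of building the same request with proper escaping follows. buildSentimentRequest is a hypothetical helper that only assembles the request, and the sample text is made up.

```javascript
// Hypothetical helper: builds the inference request for one tweet.
const MODEL_URL = 'https://api-inference.huggingface.co/models/cardiffnlp/twitter-roberta-base-sentiment';

function buildSentimentRequest(text, apiKey) {
  return {
    url: MODEL_URL,
    method: 'POST',
    headers: {
      Authorization: `Bearer ${apiKey}`,
      'Content-Type': 'application/json',
    },
    // JSON.stringify escapes quotes and newlines that would break a hand-built JSON string.
    body: JSON.stringify({ inputs: text }),
  };
}

const tweetText = 'He said "wow"\nand then left';
const sentimentRequest = buildSentimentRequest(tweetText, 'YOUR_HF_API_KEY');
console.log(sentimentRequest.body);
```

Inside n8n, a similar effect can usually be had by letting an expression produce the escaped value, for example with JSON.stringify($json.text), instead of wrapping the raw text in quotes.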

The model returns three labels, LABEL_0, LABEL_1, and LABEL_2, which correspond to negative, neutral, and positive sentiments respectively.

To convert these into more understandable terms, we used an n8n Code node with the following JavaScript code:

    // Process all input items
    const items = $input.all().map(item => {
      // Create a copy of the original JSON data
      const newItem = {...item.json};

      // Calculate sentiment if not already present
      if (!newItem.sentiment) {
        const labelMap = {
          "LABEL_0": "negative",
          "LABEL_1": "neutral",
          "LABEL_2": "positive"
        };

        // Get all available results
        const results = [];
        ['0', '1', '2'].forEach(key => {
          if (newItem[key]) {
            results.push(newItem[key]);
          }
        });

        // Determine sentiment if we have results
        if (results.length > 0) {
          const top = results.reduce((max, current) =>
            (current.score > max.score) ? current : max,
            {score: -Infinity}
          );
          newItem.sentiment = labelMap[top.label] || 'unknown';
        } else {
          newItem.sentiment = 'unknown';
        }
      }

      return {json: newItem};
    });

    return items;

This Code node picks the highest-scoring label for each item, maps it to a clear sentiment category, and adds a new sentiment field with the result. Items without a usable result are marked as unknown.
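The same selection logic can be tried outside n8n with a small standalone function. The sketch below assumes the model's raw response for a single input is a nested list of label and score pairs. topSentiment is a hypothetical helper and the scores are made up.

```javascript
// Assumed response shape for one input: [[{ label, score }, ...]] (scores below are made up).
const LABEL_MAP = { LABEL_0: 'negative', LABEL_1: 'neutral', LABEL_2: 'positive' };

function topSentiment(response) {
  // Accept either the nested form [[...]] or an already-flattened list [...].
  const candidates = Array.isArray(response?.[0]) ? response[0] : response;
  if (!Array.isArray(candidates) || candidates.length === 0) return 'unknown';
  // Keep whichever entry has the highest score.
  const top = candidates.reduce((best, cur) => (cur.score > best.score ? cur : best));
  return LABEL_MAP[top.label] || 'unknown';
}

const sampleResponse = [[
  { label: 'LABEL_0', score: 0.05 },
  { label: 'LABEL_1', score: 0.15 },
  { label: 'LABEL_2', score: 0.80 },
]];
console.log(topSentiment(sampleResponse)); // positive
console.log(topSentiment({ error: 'Model is loading' })); // unknown
```

Returning unknown for anything that is not a list of scores keeps error responses from being written to the sheet as if they were sentiments.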

Finally, we wrote the sentiment value back to the same Google Sheet, giving us a final dataset with both tweet data and analyzed sentiment.

Here Is the Sheets Output Result

The scraped tweets along with their analyzed sentiments are neatly organized in Google Sheets, ready for review or further analysis.
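As one example of further analysis, the sentiment column can be tallied to get a quick picture of the overall mood. This is a rough sketch rather than part of the workflow: summarizeSentiment is a hypothetical helper, and the rows stand in for data read back from the sheet.

```javascript
// Counts rows per sentiment and reports each category's share as a rounded percentage.
function summarizeSentiment(rows) {
  const counts = { positive: 0, neutral: 0, negative: 0, unknown: 0 };
  for (const row of rows) {
    const known = Object.prototype.hasOwnProperty.call(counts, row.sentiment);
    counts[known ? row.sentiment : 'unknown'] += 1;
  }
  const total = rows.length || 1; // avoid dividing by zero on an empty sheet
  const share = Object.fromEntries(
    Object.entries(counts).map(([label, n]) => [label, Math.round((n / total) * 100)])
  );
  return { counts, share };
}

// Made-up rows for illustration.
const rows = [
  { id: '1', sentiment: 'positive' },
  { id: '2', sentiment: 'positive' },
  { id: '3', sentiment: 'negative' },
  { id: '4', sentiment: 'neutral' },
];
console.log(summarizeSentiment(rows));
```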

Complete Workflow of n8n Automation

Below is the full visual diagram of our n8n workflow demonstrating how all steps are connected seamlessly.

Resources & References Used in This Blog

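We’ve used several models, tools, and resources while writing this blog. Here’s a handy list for you:

- Hugging Face model: cardiffnlp/twitter-roberta-base-sentiment

- Apify actor: kaitoeasyapi/twitter-x-data-tweet-scraper-pay-per-result-cheapest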

Thank You for Following Along!

We hope this guide helped you understand how to build a powerful sentiment analysis workflow using n8n, Google Sheets, Apify, and Hugging Face.

Whether you’re tracking public feedback about your brand, analyzing product reviews, or monitoring online hype — this automated setup can save you hours of manual work and give you actionable insights in real time.

If you have any questions, want to expand this use case further, or need help setting up a similar automation for your business, feel free to reach out.

We at Parsedom are always here to help bring your automation ideas to life — with smart, scalable, and no-code solutions.

Need Custom Solutions?

Mail us at info@parsedom.com